4.2. The Image Classification Dataset — Dive into Deep Learning 1.0.3 documentation

d2l.ai

[1]

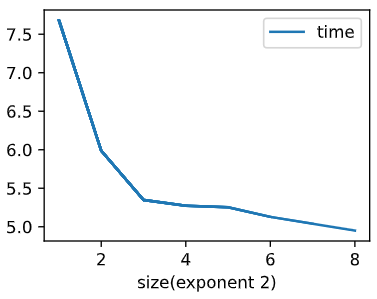

Batch sizes are usually chosen as powers of two, so we can measure the time needed to load the dataset for batch_size = $2^n$, $n = 1, \dots, 8$.

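    import time
    from d2l import torch as d2l  # assumes the PyTorch version of the d2l package

    ls = [2**i for i in range(1, 9)]  # batch sizes 2, 4, ..., 256
    t_info = []
    for size in ls:
        data = d2l.FashionMNIST(resize=(32, 32), batch_size=size)
        tic = time.time()
        for X, y in data.train_dataloader():
            continue  # one full pass; the batches themselves are discarded
        t_info.append(time.time() - tic)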
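The timing loop above can also be factored into a small reusable helper. The following is only a minimal sketch using the standard library: `time_one_pass` and `fake_loader` are hypothetical names, and the generator merely stands in for `data.train_dataloader()` so the example runs without downloading anything. It uses `time.perf_counter`, which is a clock intended for measuring short intervals.

```python
import time

def time_one_pass(batches):
    """Return (seconds, number_of_batches) for one full pass over `batches`."""
    tic = time.perf_counter()
    count = 0
    for _ in batches:
        count += 1
    return time.perf_counter() - tic, count

def fake_loader(num_examples, batch_size):
    """Hypothetical stand-in for a data loader: yields lists of indices."""
    for start in range(0, num_examples, batch_size):
        yield list(range(start, min(start + batch_size, num_examples)))

batch_sizes = [2**n for n in range(1, 9)]  # 2, 4, ..., 256
timings = []
for size in batch_sizes:
    elapsed, count = time_one_pass(fake_loader(1024, size))
    timings.append(elapsed)
```

With a real loader, the `fake_loader(...)` call would be replaced by the actual dataloader object; the absolute numbers from the stand-in say nothing about Fashion-MNIST itself.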

The timings are plotted with d2l's `ProgressBoard`, whose interface is declared as follows:

    class ProgressBoard(d2l.HyperParameters):  #@save
        """The board that plots data points in animation."""
        def __init__(self, xlabel=None, ylabel=None, xlim=None,
                     ylim=None, xscale='linear', yscale='linear',
                     ls=['-', '--', '-.', ':'], colors=['C0', 'C1', 'C2', 'C3'],
                     fig=None, axes=None, figsize=(3.5, 2.5), display=True):
            self.save_hyperparameters()

        def draw(self, x, y, label, every_n=1):
            raise NotImplementedError

    import numpy as np

    board = d2l.ProgressBoard('size(exponent 2)')
    for x in np.arange(1, 9):
        board.draw(x, t_info[x-1], 'time', every_n=1)

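Result:

The loading time decreases as batch_size gets bigger: one pass over the dataset takes fewer iterations, so the fixed per-batch overhead is paid less often. By the same reasoning, reducing batch_size toward 1 should make reading slower.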
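To make the trend concrete, here is a minimal sketch that counts how many batches one pass requires for each batch size. It assumes the 60,000-example Fashion-MNIST training split and that the final partial batch is kept; `batches_per_pass` is a hypothetical helper name, not part of d2l.

```python
import math

NUM_TRAIN = 60_000  # size of the Fashion-MNIST training split

def batches_per_pass(num_examples, batch_size):
    """Number of batches in one pass when the last partial batch is kept."""
    return math.ceil(num_examples / batch_size)

for n in range(1, 9):
    size = 2**n
    print(f"batch_size={size:3d}  batches={batches_per_pass(NUM_TRAIN, size)}")
```

Going from batch_size=2 to batch_size=256 cuts the iteration count from 30000 to 235, which is consistent with the shorter loading time measured above.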

[2]

This probably depends on the hardware, for example the disk read speed and the number of CPU cores available for loading data.

[3]

Datasets — Torchvision 0.17 documentation


pytorch.org
